Dynamic Rendering of Neural Implicit Representations in VR

Extract time-series geometry and color information from SDF-based models and render the resulting dynamic meshes in real time in VR.

This is a course project for the Mixed Reality course at ETH. The live demo and poster session for all projects in the course took place on 18 December 2023, 10:15 to 13:00, in HG EO-Nord. If you were there, you may have tried our demo on real devices.

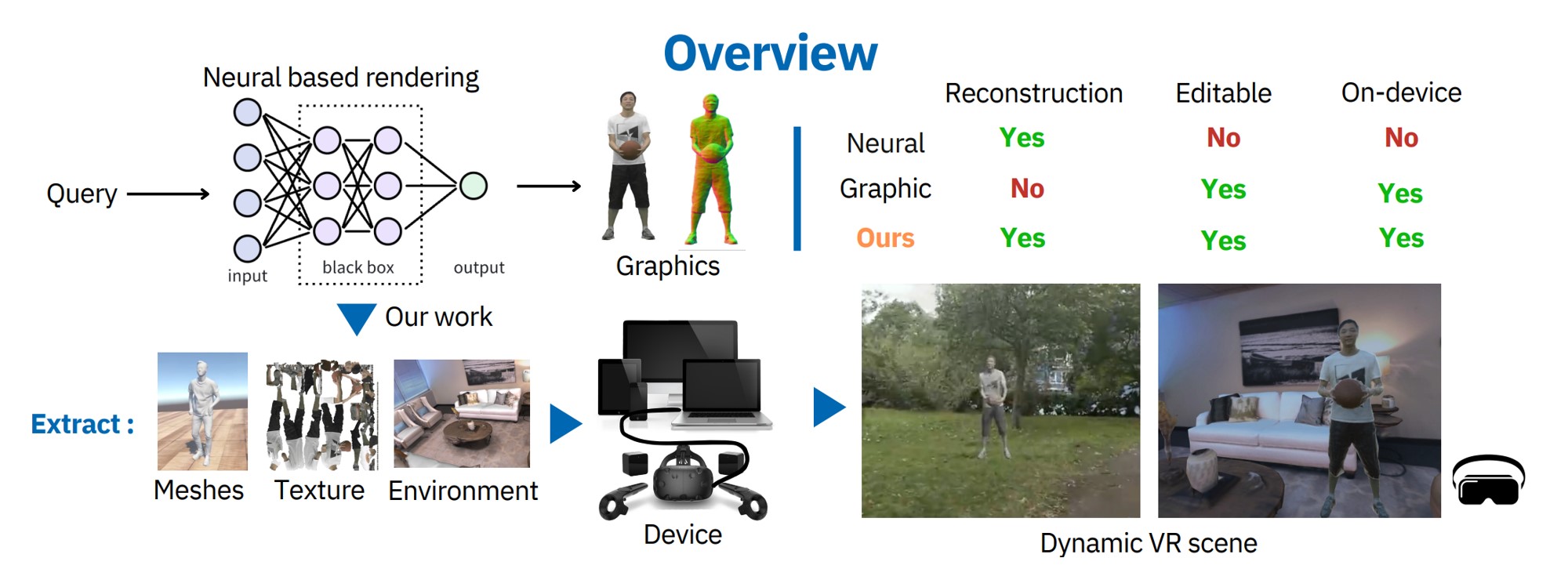

Overview

This project aims to bridge the gap between neural reconstruction and real-time rendering on mobile devices.

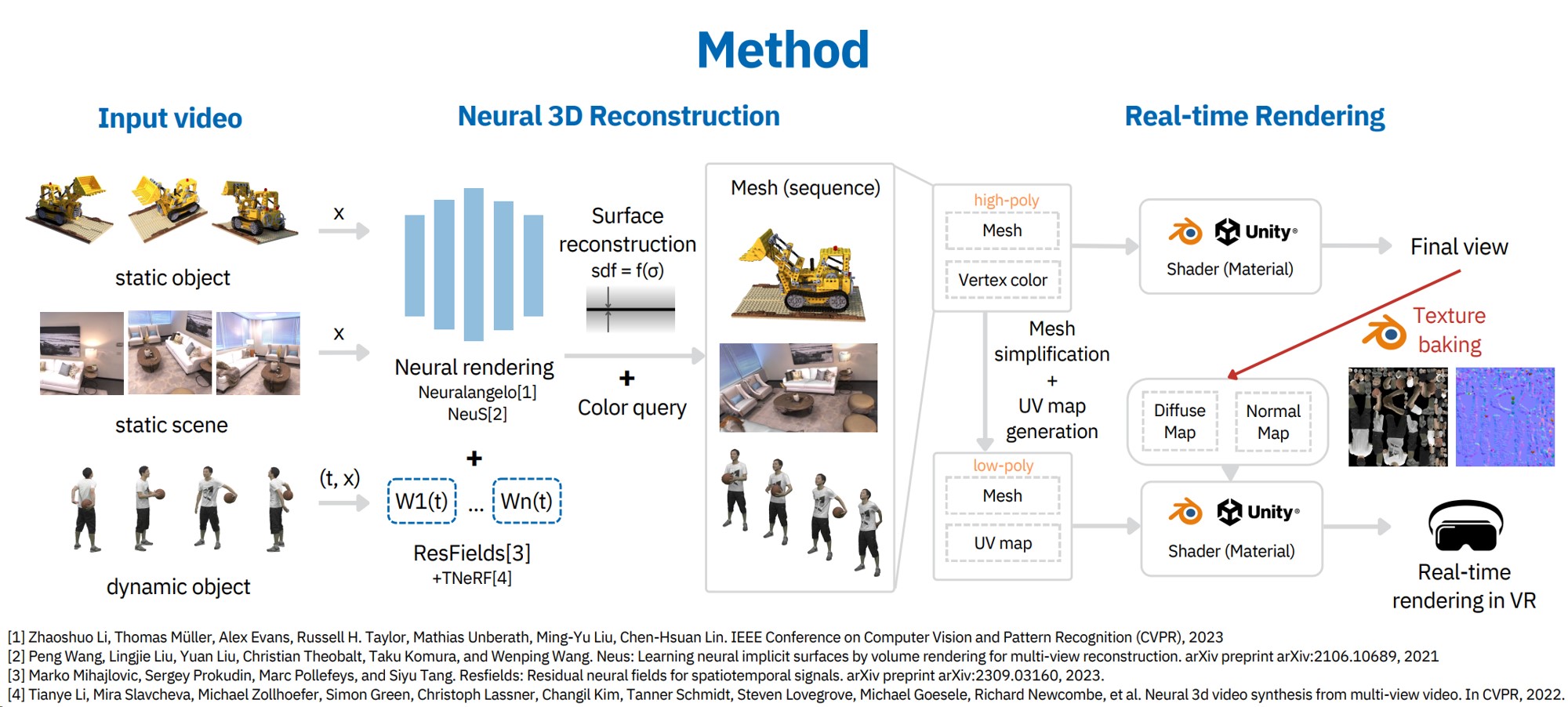

Algorithm

We perform neural reconstruction for multiple objects, including the background, static objects, and dynamic objects. The extracted meshes with vertex colors (mesh sequences in the case of dynamic objects) are simplified, and textures are then baked for the simplified meshes.
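
To illustrate why simplification and vertex colors interact badly, here is a minimal sketch of one simple decimation scheme, vertex clustering, in plain Python. This is an assumption for illustration only: the project does not state which simplification method it uses, and `cluster_simplify`, `cell_size`, and the toy checkerboard grid are hypothetical names and data, not part of the project code.

```python
from collections import defaultdict


def cluster_simplify(vertices, colors, faces, cell_size):
    """Vertex clustering: merge all vertices that fall into the same grid cell.

    Positions and vertex colors of merged vertices are averaged.
    Illustrative stand-in only, not the project's actual simplifier.
    """
    cell_of = []                  # grid cell key for each input vertex
    members = defaultdict(list)   # cell key -> indices of the vertices inside it
    for i, (x, y, z) in enumerate(vertices):
        key = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        cell_of.append(key)
        members[key].append(i)

    new_index, new_vertices, new_colors = {}, [], []
    for key, idxs in members.items():
        new_index[key] = len(new_vertices)
        n = len(idxs)
        new_vertices.append(tuple(sum(vertices[i][k] for i in idxs) / n for k in range(3)))
        new_colors.append(tuple(sum(colors[i][k] for i in idxs) / n for k in range(3)))

    new_faces, seen = [], set()
    for a, b, c in faces:
        tri = (new_index[cell_of[a]], new_index[cell_of[b]], new_index[cell_of[c]])
        if len(set(tri)) < 3:
            continue              # triangle collapsed to an edge or a point
        if frozenset(tri) in seen:
            continue              # duplicate of an already emitted triangle
        seen.add(frozenset(tri))
        new_faces.append(tri)
    return new_vertices, new_colors, new_faces


# Toy input: a flat 4x4 vertex grid with a black/white checkerboard of vertex colors.
vertices = [(float(x), float(y), 0.0) for y in range(4) for x in range(4)]
colors = [(float((x + y) % 2),) * 3 for y in range(4) for x in range(4)]
faces = []
for j in range(3):
    for i in range(3):
        a = j * 4 + i
        b, c, d = a + 1, a + 4, a + 5
        faces += [(a, b, c), (b, d, c)]

simple_v, simple_c, simple_f = cluster_simplify(vertices, colors, faces, cell_size=2.0)
print(len(vertices), "->", len(simple_v), "vertices")
print(len(faces), "->", len(simple_f), "triangles")
print(simple_c)
```

On this toy grid, the 16 vertices collapse to 4, and every merged vertex ends up mid-gray, so the checkerboard pattern disappears entirely. Baking the original colors into a texture image avoids tying color resolution to vertex count, which is the motivation for the texture baking step.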

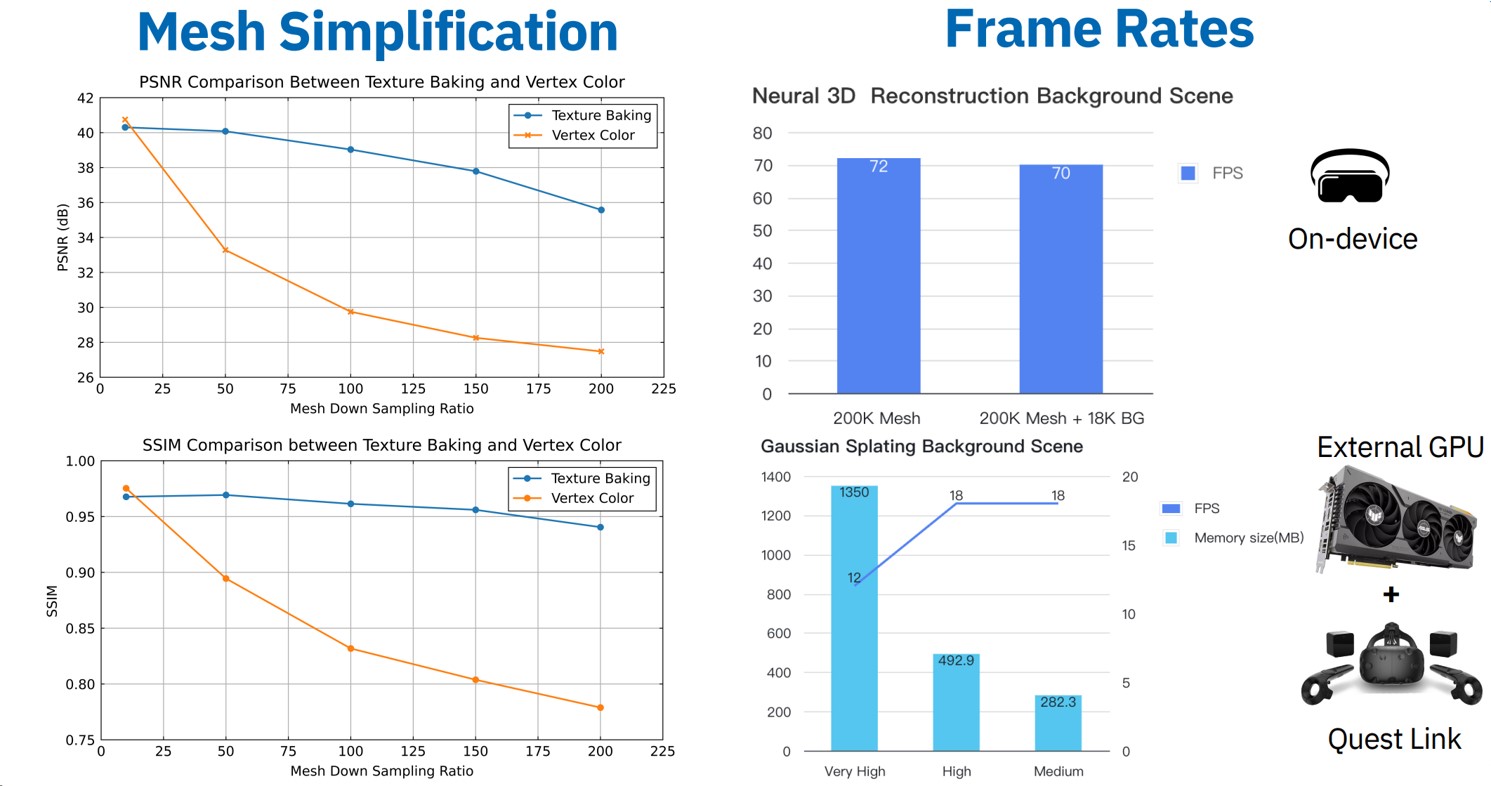

Quantitative Results

Texture baking preserves more detail from the original mesh than using the vertex colors directly. This allows us to reduce scene complexity significantly while keeping the per-frame rendering cost manageable. The final scene consists of multiple reconstructed objects and renders on-device in real time at 72 FPS on an Oculus Quest 2. In contrast, directly rendering the scenes with a neural reconstruction method (we use Gaussian splatting here) yields less than 20 FPS, even with the help of an external GPU.
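
To put the two frame rates in perspective, they can be converted into per-frame time budgets. The short sketch below only does this arithmetic on the figures reported above; `frame_budget_ms` is a hypothetical helper name, and no additional measurements are implied.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


mesh_budget = frame_budget_ms(72)    # baked-mesh scene on the headset
splat_frame = frame_budget_ms(20)    # upper bound on the splatting frame rate

print(f"72 FPS -> {mesh_budget:.1f} ms per frame")
print(f"20 FPS -> {splat_frame:.1f} ms per frame")
print(f"ratio  -> {splat_frame / mesh_budget:.1f}x")
```

At 72 FPS each frame must finish in about 13.9 ms, whereas a renderer running below 20 FPS takes more than 50 ms per frame, at least 3.6 times over that budget.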