FPGA Acceleration for Storage Deduplication

A transparent FPGA-based layer between the CPU and storage system. It is dedicated to storage deduplication.

In Short

Traditional deduplication systems use FPGA as an offload engine and maintain a hash table on the host side. Those designs need extra memory copy and long-latency communications between the host and FPGA. Both affect the performance of the whole system.

To overcome this limitation, our new system integrates all logic related to page deduplication to FPGA. No extra memory copy and communication are needed in our system. Furthermore, the system is implemented entirely in hardware, delivering 12.7GB/s(100Gbps) throughput and < 30us latency(10x faster than previous work). This project was carried out at Systems Group, ETH.

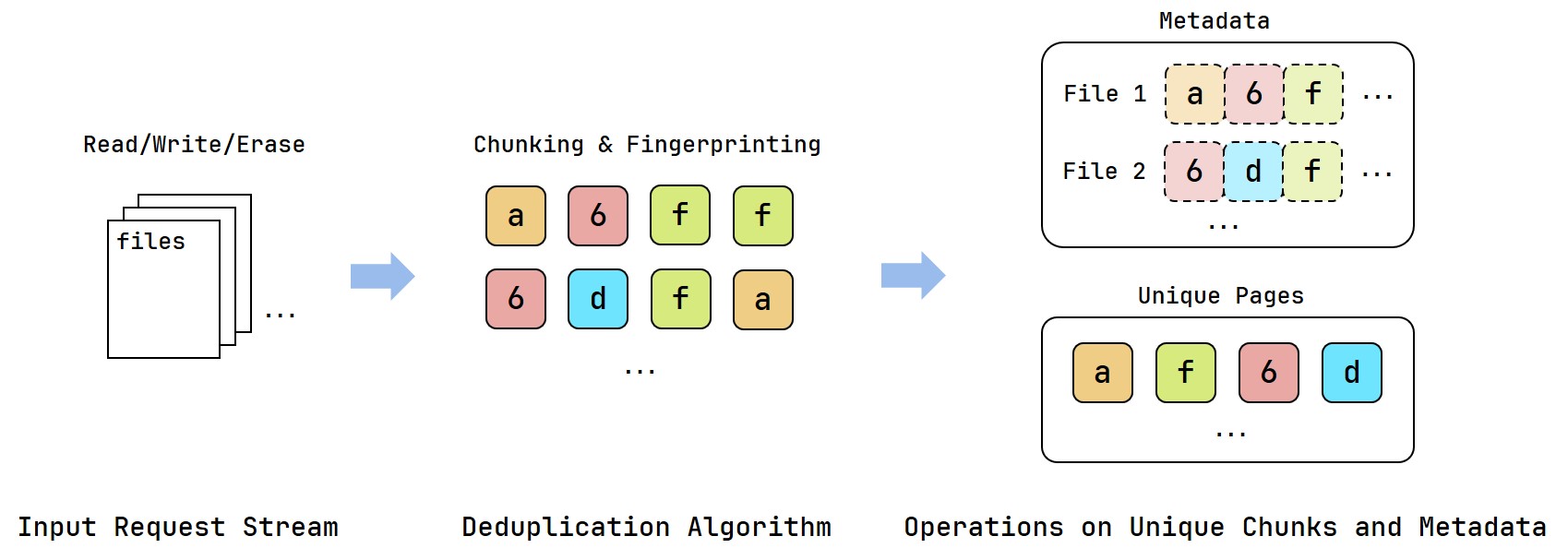

Deduplication: Algorithm

The deduplication system chunks the input and then identifies the unique chunks by fingerprinting them using a cryptographically secure hash(typically MD5 or SHA). The system maintains metadata for the correspondence between the user pages and unique pages. Only unique pages are stored in SSD to lower the write operation and extend the SSD lifetime.

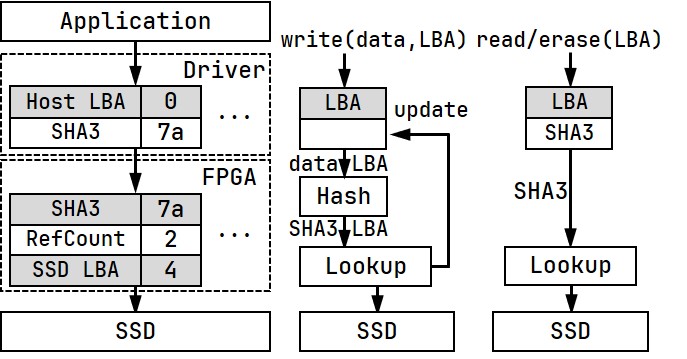

System Setup

Our whole system contains the driver and FPGA logic. The system is a transparent layer inserted between the CPU and SSD. Neither is aware of this layer, which handles everything related to page deduplication: chunking, fingerprinting, metadata management, and garbage collection.

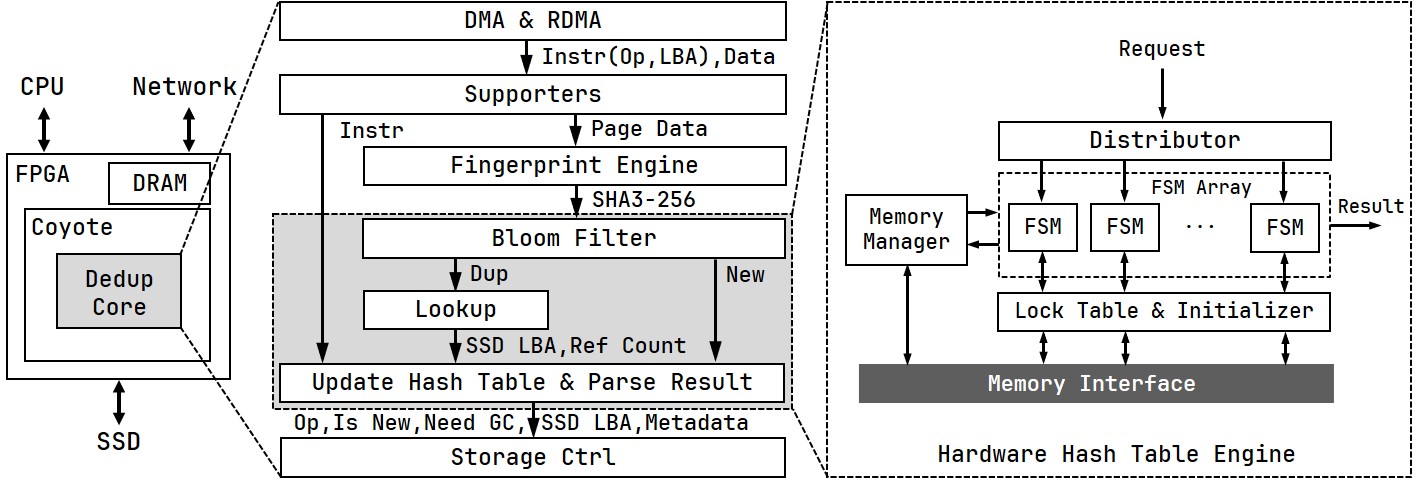

Hardware Architecture

On the hardware side, the deduplication logic, DedupCore, is built on top of the FPGA infrastructure Coyote, which provides DMA and RDMA access to the system. A hardware hash table engine maintains page metadata in the FPGA memory. FSM array supports multiple lookup requests in parallel, and a lock table is employed to ensure the correctness of those parallel lookups.

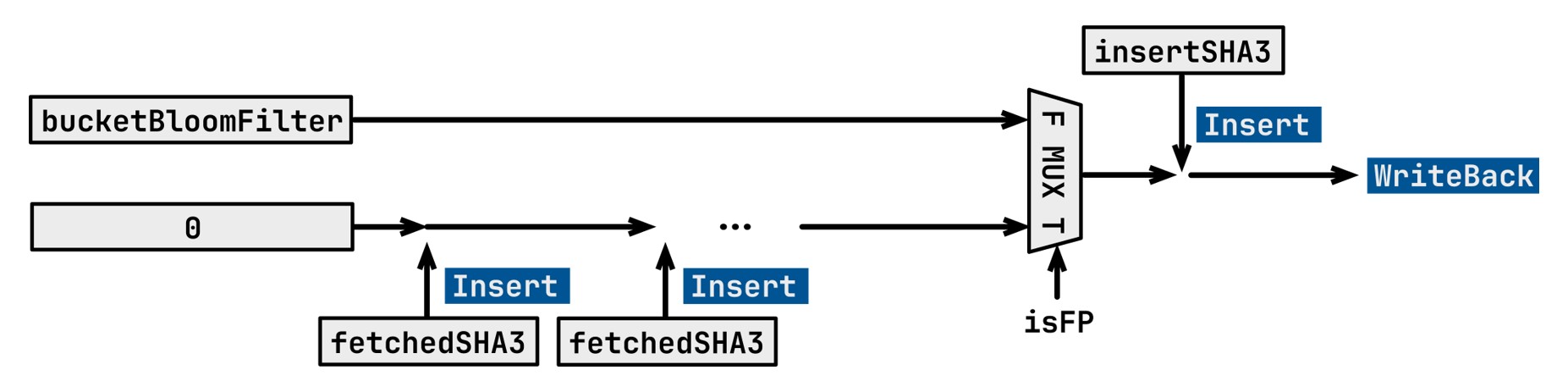

Bloom Filter Deletion Mechanism

We employ the Bloom filter in our system to quickly identify new pages to accelerate the hash table lookup further. The challenge is that the Bloom filter does not support deletion. Traditional methods use extra memory space to enable deletion(e.g., counting Bloom filter). Those methods will drastically increase the memory footprint(e.g., a 16-bit counter leads to 16x memory space).

In our system, we design a per-bucket Bloom filter (each bucket has its own Bloom filter) and a reconstruction mechanism that reconstructs the bucket’s Bloom filter when a false positive is detected in the lookup. This reconstruction mechanism does not introduce extra memory access since we look up until the end of the linked list when a false positive happens, and also has no memory space overhead.

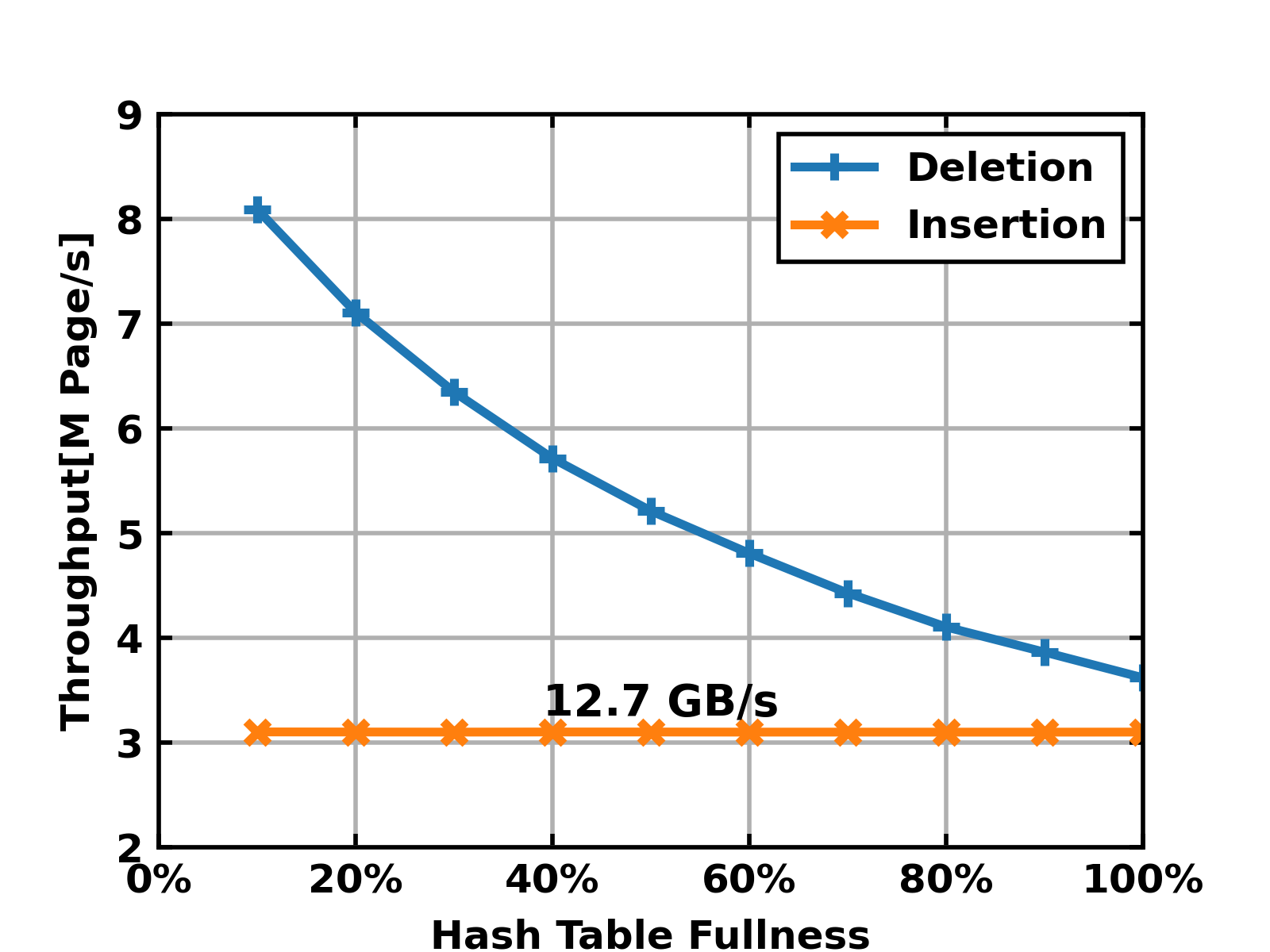

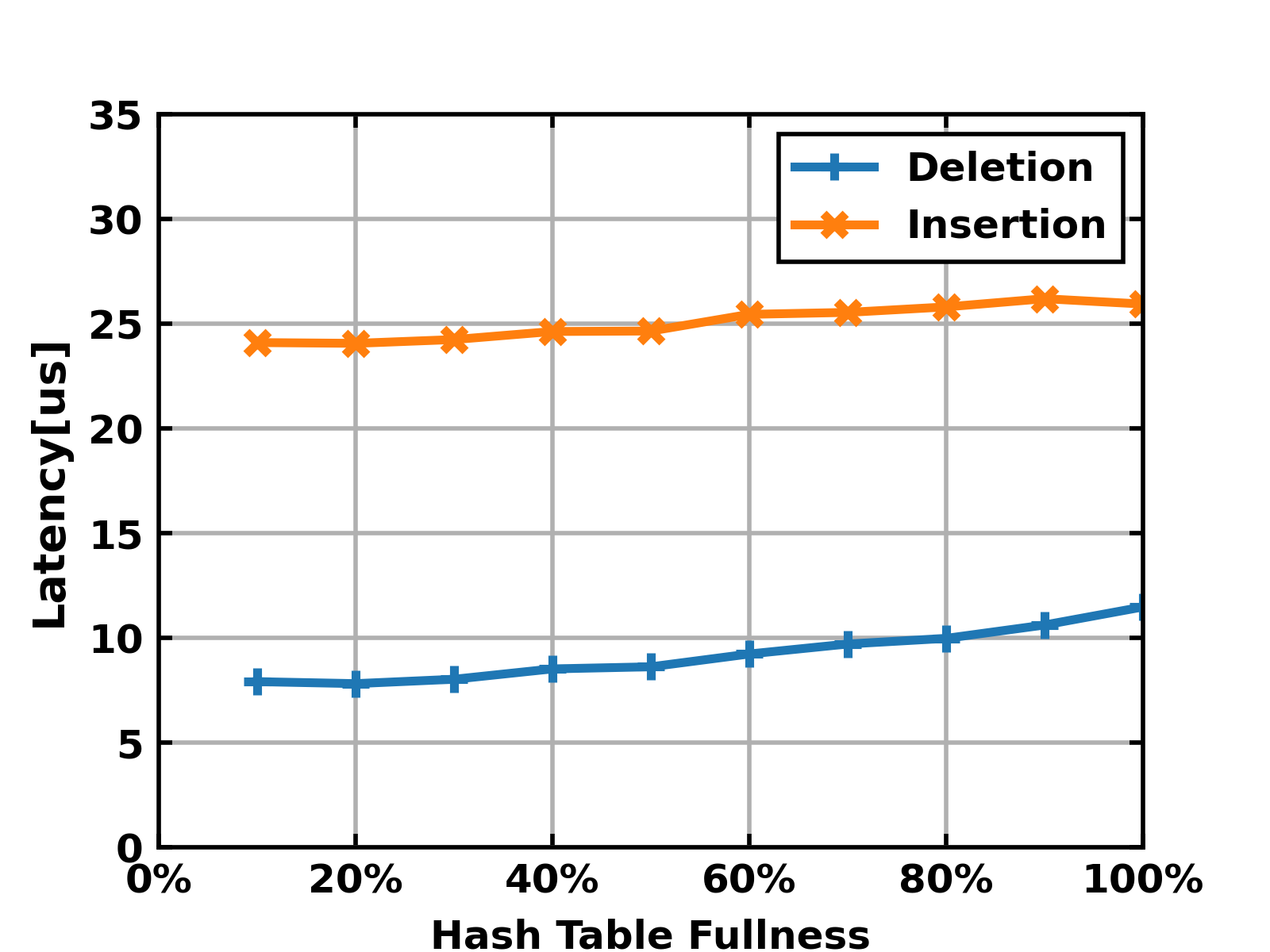

Performance

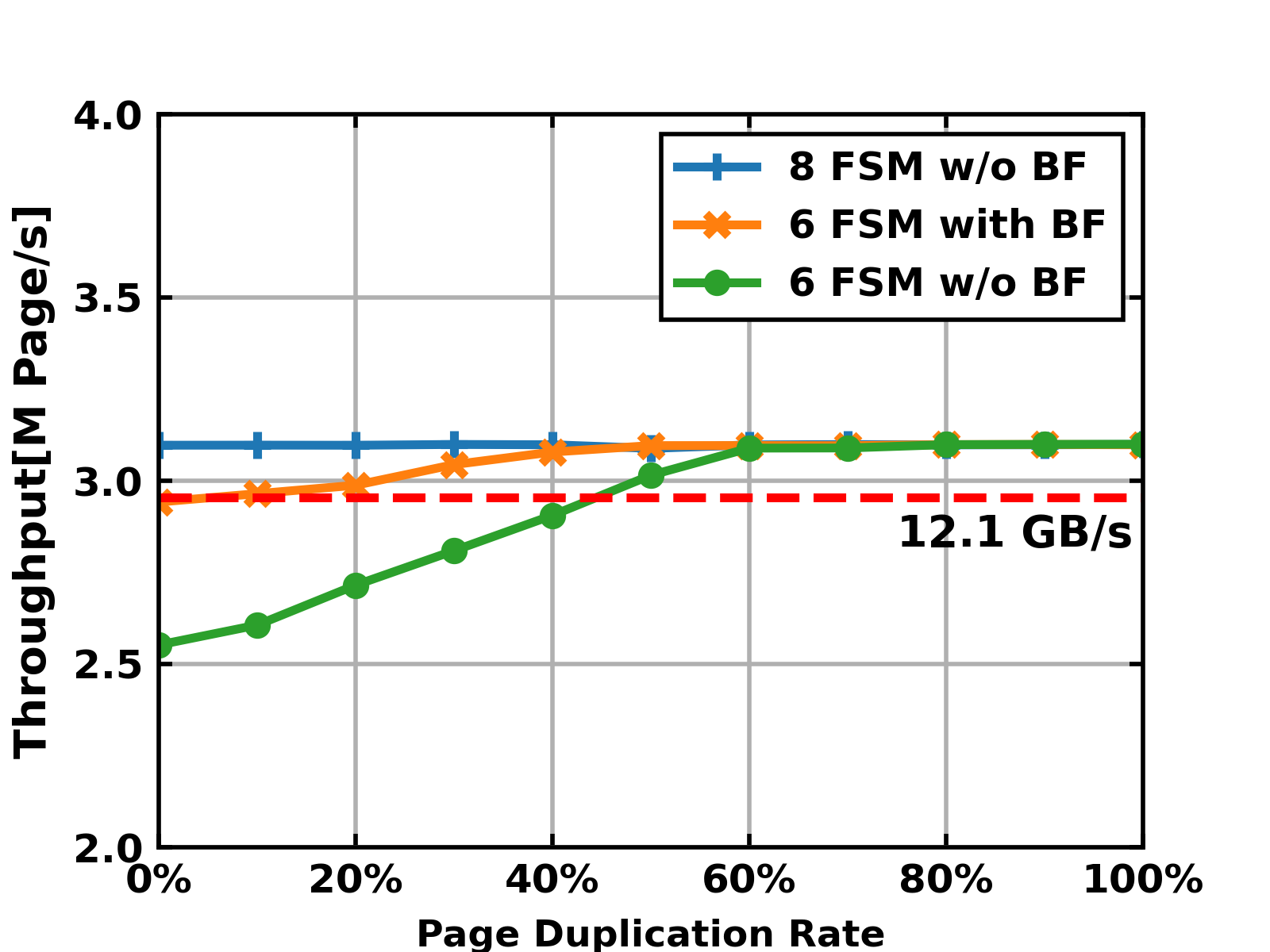

When using 6 FSMs for parallel lookup, we saturate the DMA throughput when the requests only contains existing pages. Latency is < 30 us for write requests and < 15us for erase requests.

The worst case in our system corresponds to the scenario that the hash table is almost full and all incoming pages are new, which means we always need to look up until the end of the linked list. With the help of the Bloom filter, we can still get 12.1 GB/s throughput in the worst case without increasing the number of FSM.

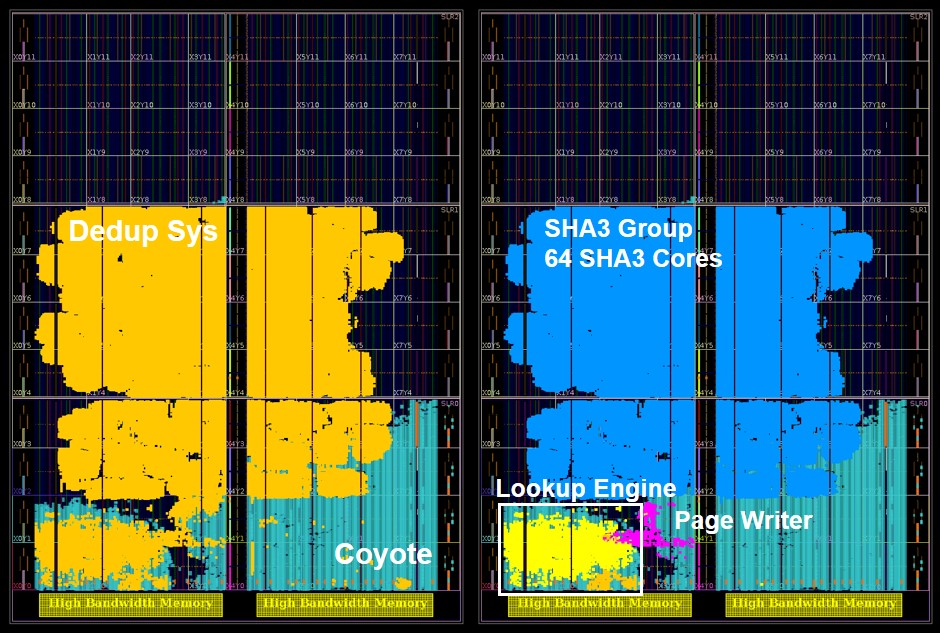

FPGA Floorplan

Our system uses roughly 2/3 of the FPGA resource, leaving enough space for other extensions.